How to Use Terraform with GCP and Twingate

This guide provides step-by-step instructions on automating Twingate deployments with Terraform on Google Cloud Platform.

Getting Started - What we are going to build

Production Environments

The information in this article should be used as a guide only. If you are deploying this method into a production environment, we recommend that you also follow all security and configuration best practices.

Images and code samples in this guide may contain references to specific versions of software or container images that may not be the latest versions available. Please refer to the official documentation for that software or container image for the most up-to-date information.

The goal of this guide is to use Terraform to deploy Twingate on a Google Cloud Platform (GCP) VPC including all required components (Connector, Remote Network) and Configuration Items (Resource, Group, etc.):

    mkdir twingate_gcp_demo
    cd twingate_gcp_demo

All commands below will be run from within the folder we just created. We can now open this folder in our editor of choice.

Storing Terraform Code

For simplicity and readability all the code will be included in a single main.tf file. For more information on Terraform code structure, please see this guide and this guide.

Setting Up the Terraform Providers

On Terraform Providers

A Terraform Provider is a module that leverages an external API (like the Twingate API) and makes certain functions available right from within Terraform without having to know anything about the external API itself.

First, let’s set up the provider configuration. Create a new file called main.tf with the following content (amending each value to match your environment/requirements):

    terraform {
      required_providers {
        twingate = {
          source  = "twingate/twingate"
          version = "0.1.10"
        }
      }
    }

    provider "google" {
      project = "twingate-projects"
      region  = "europe-west2"
      zone    = "europe-west2-c"
    }

Twingate Terraform Provider

The latest Terraform Provider for Twingate is always available here.

We need 2 providers in this case: One for Twingate (it will allow us to create and configure Twingate specific Configuration Items) and one for GCP (it will allow us to spin up the required infrastructure / VPC).
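As a hedged sketch, both providers could also be declared explicitly in the terraform block, in place of the block shown above. The guide itself only declares twingate and lets Terraform infer hashicorp/google. The `~> 4.30` constraint is an assumption based on the 4.30.0 release that appears in this guide's init output, so adjust it to whatever version you have tested.

```hcl
terraform {
  required_providers {
    # Pinned exactly as in the guide
    twingate = {
      source  = "twingate/twingate"
      version = "0.1.10"
    }

    # Assumption: constrain the Google provider to the 4.x line (4.30 or later)
    google = {
      source  = "hashicorp/google"
      version = "~> 4.30"
    }
  }
}
```

With an explicit constraint, the init output would report the constraint being matched instead of "Finding latest version of hashicorp/google", and a later major release would not be picked up unexpectedly.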

Once this is in place we can run the following command to download and install those providers locally on your system:

    terraform init

You should see the provider plugins being initialized and installed successfully:

    Initializing the backend...

    Initializing provider plugins...
    - Finding twingate/twingate versions matching "0.1.10"...
    - Finding latest version of hashicorp/google...
    - Installing twingate/twingate v0.1.10...
    - Installed twingate/twingate v0.1.10 (self-signed, key ID E8EBBE80BA0C9369)
    - Installing hashicorp/google v4.30.0...
    - Installed hashicorp/google v4.30.0 (signed by HashiCorp)

    Partner and community providers are signed by their developers.
    If you'd like to know more about provider signing, you can read about it here:
    https://www.terraform.io/docs/cli/plugins/signing.html

    Terraform has created a lock file .terraform.lock.hcl to record the provider
    selections it made above. Include this file in your version control repository
    so that Terraform can guarantee to make the same selections by default when
    you run "terraform init" in the future.

    Terraform has been successfully initialized!

GCP Credentials

This guide assumes you have credentials set up to connect to GCP. If not, you can find details of how to do this on the official Terraform page here.
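One possible way to supply credentials, sketched here under assumptions and not taken from the original guide, is to point the Google provider at a service account key file through its `credentials` argument. The variable name `gcp_credentials_file` is hypothetical. This block would be used in place of the provider "google" block shown earlier, not alongside it.

```hcl
# Hypothetical variable: path to a service account key file in JSON format
variable "gcp_credentials_file" {
  type        = string
  description = "Path to a GCP service account key (JSON)"
}

provider "google" {
  # Read the key file contents; omit this line to fall back to
  # Application Default Credentials instead
  credentials = file(var.gcp_credentials_file)

  project = "twingate-projects"
  region  = "europe-west2"
  zone    = "europe-west2-c"
}
```

If you go this route, treat the key file like the API token discussed later in this guide and keep it out of source control.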

Creating the Twingate infrastructure

To create the Twingate infrastructure we will need a way to authenticate with Twingate. To do this we will create a new API key which we will use with Terraform.

In order to do so: Navigate to Settings → API then Generate a new Token:

You will need to set your token with Read, Write & Provision permissions, but you may want to restrict the allowed IP range to only where you will run your Terraform commands from.

Click on generate and copy the token.

Terraform vars file

Like most programming languages, Terraform can use variables to define values. Let’s create a new file called terraform.tfvars, which we will use to define useful variables.

Add the following lines to this file, adding in the value of the API Token into tg_api_key and the name of your Twingate tenant. (The tenant is the mycorp part of the URL to your Twingate Console if the full URL was https://mycorp.twingate.com):

    tg_api_key="Copied API key"
    tg_network="mycorp"

Using Source Control

If you are using or thinking of using source control, please ensure you exclude this file for security reasons. (You don’t want your API tokens visible in clear text on a public repository!)
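A complementary safeguard, sketched here as a suggestion and not part of the original guide: when the two variables are declared in main.tf (the next step), they can be given a type and the token can be marked `sensitive` so Terraform redacts it in plan and apply output. This assumes Terraform 0.14 or later, and these declarations would replace the empty ones, not be added next to them.

```hcl
variable "tg_api_key" {
  type        = string
  description = "Twingate API token"
  sensitive   = true # redacted in plan/apply output
}

variable "tg_network" {
  type        = string
  description = "Twingate tenant name, e.g. mycorp for https://mycorp.twingate.com"
}
```

Marking a variable as sensitive only affects what Terraform prints. The value is still written to the state file, so the state needs protecting as well.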

We can then add these variables to the main.tf file:

    variable "tg_api_key" {}
    variable "tg_network" {}

And reference these in the provider config (no change needed):

    provider "twingate" {
      api_token = var.tg_api_key
      network   = var.tg_network
    }

Now we can start creating resources in Twingate. Let’s first start with the highest level concept, the Twingate Remote Network:

    resource "twingate_remote_network" "gcp_demo_network" {
      name = "gcp demo remote network"
    }

Some clarification on what is happening here:

- resource does NOT refer to a Twingate Resource but means a resource in the Terraform sense

- twingate_remote_network refers to the type of object we are creating as defined by the Twingate Terraform provider

- gcp_demo_network is a unique ID we are specifying in order to be able to reference this object when doing other things (like creating a Connector attached to this newly created Remote Network)

- gcp demo remote network is the actual name used to name our newly created Remote Network (and is the name visible in the admin console)
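To illustrate how the Terraform-side name (gcp_demo_network) differs from the display name, here is a minimal sketch of an output that references the object by type and name. The output name `gcp_demo_remote_network_id` is hypothetical and is not used elsewhere in this guide.

```hcl
# Hypothetical output: exposes the ID Twingate assigns to the Remote Network
output "gcp_demo_remote_network_id" {
  description = "ID of the Remote Network created by twingate_remote_network.gcp_demo_network"
  value       = twingate_remote_network.gcp_demo_network.id
}
```

The same `<type>.<name>.<attribute>` pattern is what the Connector uses to attach itself to this Remote Network.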

Let’s now create the Connector:

    resource "twingate_connector" "gcp_demo_connector" {
      remote_network_id = twingate_remote_network.gcp_demo_network.id
    }

Some clarification here as well:

- twingate_connector refers to a Connector as per the Terraform provider for Twingate

- remote_network_id is the only parameter required to create a Connector: this is consistent with creating a connector from the Admin Console: you need to attach it to a remote network.

- twingate_remote_network.gcp_demo_network.id is read as

<Terraform resource type>.<Terraform resource name>.<internal ID of that object>

And finally generating the Tokens we will use when setting up the Connector:

    resource "twingate_connector_tokens" "twingate_connector_tokens" {
      connector_id = twingate_connector.gcp_demo_connector.id
    }

It’s a good idea at this point to do a quick check on our Terraform script by running:

    terraform plan

terraform plan is a non-destructive command: it simulates what Terraform would need to do without changing anything. It is therefore safe to run and is useful for troubleshooting your code.

You should see a response similar to this:

    Terraform will perform the following actions:

      # twingate_connector.gcp_demo_connector will be created
      + resource "twingate_connector" "gcp_demo_connector" {
          + id                = (known after apply)
          + name              = (known after apply)
          + remote_network_id = (known after apply)
        }

      # twingate_connector_tokens.twingate_connector_tokens will be created
      + resource "twingate_connector_tokens" "twingate_connector_tokens" {
          + access_token  = (sensitive value)
          + connector_id  = (known after apply)
          + id            = (known after apply)
          + refresh_token = (sensitive value)
        }

      # twingate_remote_network.gcp_demo_network will be created
      + resource "twingate_remote_network" "gcp_demo_network" {
          + id   = (known after apply)
          + name = "gcp demo remote network"
        }

    Plan: 3 to add, 0 to change, 0 to destroy.

If this is consistent with what you are seeing, we can then move on to doing the same for the GCP infrastructure needed.

Creating the GCP Infrastructure

Networking

First, let’s create a new VPC network by adding the following to our code:

    resource "google_compute_network" "demo_vpc_network" {
      name                    = "twingate-demo-network"
      auto_create_subnetworks = false
    }

Then we can create a subnet within the new VPC:

    resource "google_compute_subnetwork" "demo_subnet" {
      name          = "twingate-demo-subnetwork"
      ip_cidr_range = "172.16.0.0/24"
      network       = google_compute_network.demo_vpc_network.id
    }

Then we can create a firewall rule to allow traffic on port 80 from within this subnet only:

    resource "google_compute_firewall" "default" {
      name    = "firewall"
      network = google_compute_network.demo_vpc_network.id

      allow {
        protocol = "tcp"
        ports    = ["80"]
      }

      source_ranges = [google_compute_subnetwork.demo_subnet.ip_cidr_range]
    }

Virtual Machines

First we can create the webserver VM, install Nginx and set a nice homepage!

    resource "google_compute_instance" "vm_instance_webserver" {
      name         = "twingate-demo-webserver"
      machine_type = "e2-micro"

      boot_disk {
        initialize_params {
          image = "projects/ubuntu-os-cloud/global/images/family/ubuntu-2204-lts"
        }
      }

      network_interface {
        network    = google_compute_network.demo_vpc_network.id
        subnetwork = google_compute_subnetwork.demo_subnet.id
        access_config {
        }
      }

      # Startup script: install Nginx and replace the default homepage
      metadata_startup_script = <<EOF
    #!/bin/bash
    apt-get -y update
    apt-get -y install nginx
    echo "
    <style>
    h1 {text-align: center;}
    </style>
    <h1>TERRAFORM 💙 TWINGATE </h1>
    " > /var/www/html/index.html
    service nginx start
    sudo rm -f /var/www/html/index.nginx-debian.html
    EOF
    }

Next we can create the Twingate connector VM and install Twingate with the connection details created earlier:

We will be using a Terraform template file so we can inject our values created earlier for the Twingate installer.

Create a new folder called template and within this folder create a new file called twingate_client.tftpl.

Within this new file paste the following:

#!/bin/bash

    curl "https://binaries.twingate.com/connector/setup.sh" | sudo TWINGATE_ACCESS_TOKEN="${accessToken}" TWINGATE_REFRESH_TOKEN="${refreshToken}" TWINGATE_URL="https://${tgnetwork}.twingate.com" bash

Then back in the main.tf file, we can create the new VM for the Twingate connector:

    resource "google_compute_instance" "vm_instance_connector" {
      name         = "twingate-demo-connector"
      machine_type = "e2-micro"

      boot_disk {
        initialize_params {
          image = "projects/ubuntu-os-cloud/global/images/family/ubuntu-2204-lts"
        }
      }

      network_interface {
        network    = google_compute_network.demo_vpc_network.id
        subnetwork = google_compute_subnetwork.demo_subnet.id
        access_config {
        }
      }

      metadata_startup_script = templatefile("${path.module}/template/twingate_client.tftpl", {
        accessToken  = twingate_connector_tokens.twingate_connector_tokens.access_token,
        refreshToken = twingate_connector_tokens.twingate_connector_tokens.refresh_token,
        tgnetwork    = var.tg_network
      })
    }

Finally we need to create a new Twingate Group and add in the new resource for the web server we just created:

Create a Twingate Group:

    resource "twingate_group" "gcp_demo" {
      name = "gcp demo group"
    }

Create a new Twingate Resource:

    resource "twingate_resource" "resource" {
      name              = "gcp demo web server resource"
      address           = google_compute_instance.vm_instance_webserver.network_interface[0].network_ip
      remote_network_id = twingate_remote_network.gcp_demo_network.id
      group_ids         = [twingate_group.gcp_demo.id]

      protocols {
        allow_icmp = true
        tcp {
          policy = "RESTRICTED"
          ports  = ["80"]
        }
        udp {
          policy = "ALLOW_ALL"
        }
      }
    }

As you can see, this restricts TCP access to port 80. You will want to alter this depending on the application you are using.
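As a hedged illustration of altering the port restriction, the sketch below is a variant of the same Resource that also allows TCP port 443. It would replace the block above, not sit next to it. The extra port is an assumption for illustration only: the demo web server in this guide serves plain HTTP on port 80, and the GCP firewall rule created earlier would also need "443" added to its ports list for the traffic to reach the VM.

```hcl
resource "twingate_resource" "resource" {
  name              = "gcp demo web server resource"
  address           = google_compute_instance.vm_instance_webserver.network_interface[0].network_ip
  remote_network_id = twingate_remote_network.gcp_demo_network.id
  group_ids         = [twingate_group.gcp_demo.id]

  protocols {
    allow_icmp = true
    tcp {
      policy = "RESTRICTED"
      ports  = ["80", "443"] # 443 is an assumed extra port, not used by the demo
    }
    udp {
      policy = "ALLOW_ALL" # unchanged from the guide
    }
  }
}
```

Keeping the Twingate port list and the firewall rule in step avoids the confusing case where Twingate permits a connection that the VPC then drops.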

Your final scripts should look like this:

Finished Scripts

main.tf

    terraform {
      required_providers {
        twingate = {
          source  = "twingate/twingate"
          version = "0.1.10"
        }
      }
    }

    variable "tg_api_key" {}
    variable "tg_network" {}

    provider "google" {
      project = "twingate-projects"
      region  = "europe-west2"
      zone    = "europe-west2-c"
    }

    provider "twingate" {
      api_token = var.tg_api_key
      network   = var.tg_network
    }

    # Create a new remote network in Twingate
    resource "twingate_remote_network" "gcp_demo_network" {
      name = "gcp demo remote network"
    }

    # Create a new Twingate connector
    resource "twingate_connector" "gcp_demo_connector" {
      remote_network_id = twingate_remote_network.gcp_demo_network.id
    }

    # Create the tokens for the new connector
    resource "twingate_connector_tokens" "twingate_connector_tokens" {
      connector_id = twingate_connector.gcp_demo_connector.id
    }

    resource "google_compute_network" "demo_vpc_network" {
      name                    = "twingate-demo-network"
      auto_create_subnetworks = false
    }

    resource "google_compute_subnetwork" "demo_subnet" {
      name          = "twingate-demo-subnetwork"
      ip_cidr_range = "172.16.0.0/24"
      network       = google_compute_network.demo_vpc_network.id
    }

    resource "google_compute_firewall" "default" {
      name    = "firewall"
      network = google_compute_network.demo_vpc_network.id

      allow {
        protocol = "tcp"
        ports    = ["80"]
      }

      source_ranges = [google_compute_subnetwork.demo_subnet.ip_cidr_range]
    }

    resource "google_compute_instance" "vm_instance_webserver" {
      name         = "twingate-demo-webserver"
      machine_type = "e2-micro"

      boot_disk {
        initialize_params {
          image = "projects/ubuntu-os-cloud/global/images/family/ubuntu-2204-lts"
        }
      }

      network_interface {
        # Attach the VM to the demo VPC network and subnet
        network    = google_compute_network.demo_vpc_network.id
        subnetwork = google_compute_subnetwork.demo_subnet.id
        access_config {
        }
      }

      # Startup script: install Nginx and replace the default homepage
      metadata_startup_script = <<EOF
    #!/bin/bash
    apt-get -y update
    apt-get -y install nginx
    echo "
    <style>
    h1 {text-align: center;}
    </style>
    <h1>TERRAFORM 💙 TWINGATE </h1>
    " > /var/www/html/index.html
    service nginx start
    sudo rm -f /var/www/html/index.nginx-debian.html
    EOF
    }

    resource "google_compute_instance" "vm_instance_connector" {
      name         = "twingate-demo-connector"
      machine_type = "e2-micro"

      boot_disk {
        initialize_params {
          image = "projects/ubuntu-os-cloud/global/images/family/ubuntu-2204-lts"
        }
      }

      network_interface {
        # Attach the VM to the demo VPC network and subnet
        network    = google_compute_network.demo_vpc_network.id
        subnetwork = google_compute_subnetwork.demo_subnet.id
        access_config {
        }
      }

      metadata_startup_script = templatefile("${path.module}/template/twingate_client.tftpl", {
        accessToken  = twingate_connector_tokens.twingate_connector_tokens.access_token,
        refreshToken = twingate_connector_tokens.twingate_connector_tokens.refresh_token,
        tgnetwork    = var.tg_network
      })
    }

    resource "twingate_group" "gcp_demo" {
      name = "gcp demo group"
    }

    resource "twingate_resource" "resource" {
      name              = "gcp demo web server resource"
      address           = google_compute_instance.vm_instance_webserver.network_interface[0].network_ip
      remote_network_id = twingate_remote_network.gcp_demo_network.id
      group_ids         = [twingate_group.gcp_demo.id]

      protocols {
        allow_icmp = true
        tcp {
          policy = "RESTRICTED"
          ports  = ["80"]
        }
        udp {
          policy = "ALLOW_ALL"
        }
      }
    }

terraform.tfvars

    tg_api_key="YOUR KEY HERE"
    tg_network="YOUR NETWORK HERE"

template/twingate_client.tftpl

    #!/bin/bash

    curl "https://binaries.twingate.com/connector/setup.sh" | sudo TWINGATE_ACCESS_TOKEN="${accessToken}" TWINGATE_REFRESH_TOKEN="${refreshToken}" TWINGATE_URL="https://${tgnetwork}.twingate.com" bash

Deploying It All

Running our script 🏃♂️

The first thing we want to do is to check the script using the terraform plan command:

    terraform plan

All being well, this will run and show you all the resources which will be added to both Twingate and GCP.

Using Terraform Apply

As this is a brand new infrastructure you should not see anything being destroyed. Please make sure you double check what is being added and where!

    ...
    Plan: 10 to add, 0 to change, 0 to destroy.

If everything looks good we can go ahead and apply the code:

    terraform apply

You will need to confirm you are happy for the changes to proceed. Again, double check that what is happening is what you expect to happen.

You should then see all the resources being created:

    twingate_group.gcp_demo: Creating...
    twingate_remote_network.gcp_demo_network: Creating...
    google_compute_network.demo_vpc_network: Creating...
    twingate_remote_network.gcp_demo_network: Creation complete after 0s [id=UmVtb3RlTmV0d29yazoxNDg2NQ==]
    twingate_connector.gcp_demo_connector: Creating...
    twingate_group.gcp_demo: Creation complete after 0s [id=R3JvdXA6NTc1MzE=]
    twingate_connector.gcp_demo_connector: Creation complete after 1s [id=Q29ubmVjdG9yOjIyMDU4]
    twingate_connector_tokens.twingate_connector_tokens: Creating...
    twingate_connector_tokens.twingate_connector_tokens: Creation complete after 0s [id=Q29ubmVjdG9yOjIyMDU4]
    google_compute_network.demo_vpc_network: Still creating... [10s elapsed]
    google_compute_network.demo_vpc_network: Creation complete after 11s [id=projects/twingate-projects/global/networks/twingate-demo-network]
    google_compute_subnetwork.demo_subnet: Creating...
    google_compute_subnetwork.demo_subnet: Still creating... [10s elapsed]
    google_compute_subnetwork.demo_subnet: Still creating... [20s elapsed]
    google_compute_subnetwork.demo_subnet: Creation complete after 23s [id=projects/twingate-projects/regions/europe-west2/subnetworks/twingate-demo-subnetwork]
    google_compute_firewall.default: Creating...
    google_compute_instance.vm_instance_webserver: Creating...
    google_compute_instance.vm_instance_connector: Creating...
    google_compute_firewall.default: Still creating... [10s elapsed]
    google_compute_instance.vm_instance_webserver: Still creating... [10s elapsed]
    google_compute_instance.vm_instance_connector: Still creating... [10s elapsed]
    google_compute_firewall.default: Creation complete after 12s [id=projects/twingate-projects/global/firewalls/firewall]
    google_compute_instance.vm_instance_webserver: Creation complete after 15s [id=projects/twingate-projects/zones/europe-west2-c/instances/twingate-demo-webserver]
    twingate_resource.resource: Creating...
    google_compute_instance.vm_instance_connector: Creation complete after 15s [id=projects/twingate-projects/zones/europe-west2-c/instances/twingate-demo-connector]
    twingate_resource.resource: Creation complete after 1s [id=UmVzb3VyY2U6MjE4NzU0Ng==]

    Apply complete! Resources: 10 added, 0 changed, 0 destroyed.

Granting access

This part could be achieved via code, however it’s more likely you will be managing this manually from the interface due to other integrations, i.e. Entra ID (formerly Azure AD).

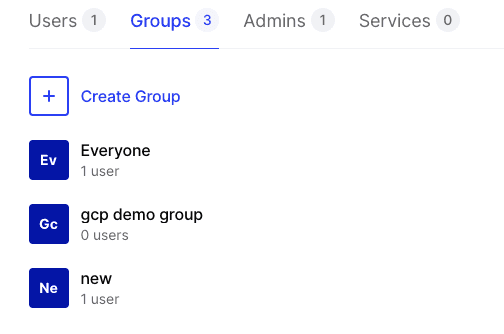

Navigate to Team → Groups

You will see the newly created group in there:

Click on this group, then users and add your user account.

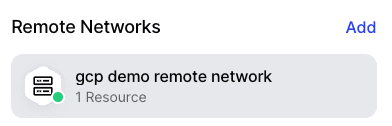

You should also see the new network:

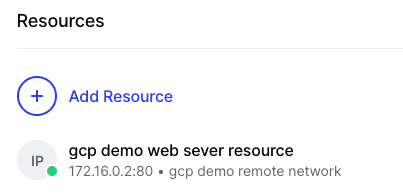

And resource that has been created:

Testing the connection

Provisioning the virtual machines can take a few minutes, so you may want to pause at this point for 5 minutes to give everything time to spin up.

When the connection has been established it will be visible from the Twingate UI:

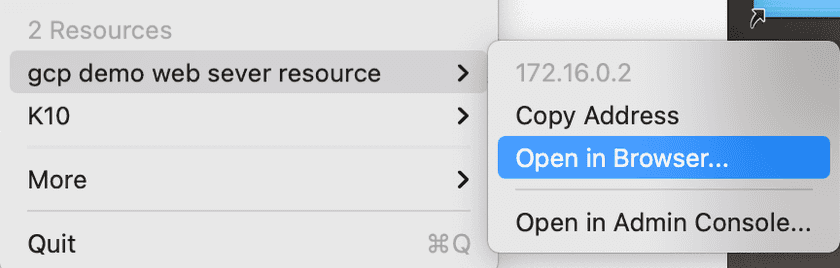

Next we can test the connection to the web server over Twingate. To do this, close your Twingate client if it is open, then re-open it. You should see the new resource as an option:

Click to open this resource in a web browser. You should now see the test page on the web server!
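If the page does not load, it can help to confirm which address the Twingate Resource points at. A minimal sketch, assuming you are happy to add an output to main.tf (the output name `webserver_internal_ip` is hypothetical and not part of the original guide):

```hcl
# Hypothetical output: the private IP that the Twingate Resource uses as its address
output "webserver_internal_ip" {
  description = "Internal IP of the demo web server VM"
  value       = google_compute_instance.vm_instance_webserver.network_interface[0].network_ip
}
```

After another terraform apply, running terraform output webserver_internal_ip prints the address, which should match the address shown for the Resource in the Twingate admin console.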

Last updated 7 months ago