Connector Best Practices

Choosing a Connector deployment method

A range of Connector deployment options is available for many types of environments. See our Help Me Choose guide to select the right Connector deployment for your scenario.

Best Practices

For context, it’s helpful to review Understanding Connectors first. With that in mind, we recommend the following:

- Deploy as many Remote Networks and Connectors as you need based on your network architecture. There is no advantage to forcing network traffic to flow through a single or smaller number of Connectors. One advantage of Twingate is that traffic will be automatically routed to a Connector associated with a Resource. Users will also be simultaneously connected to multiple Connectors if accessing Resources across multiple Remote Networks. When deploying Twingate for Resources on multiple network segments, an ideal approach is to define multiple Remote Networks (and deploy associated Connectors) to accommodate each segment.

- For redundancy, always deploy a minimum of two Connectors per Remote Network. Multiple Connectors in the same Twingate logical Remote Network are automatically clustered for load balancing and failover, which means that any Connector can forward traffic to Resources on the same Twingate Remote Network. Two Connectors per Remote Network should be sufficient for most use cases, but adding more Connectors may be helpful if you anticipate a large number of simultaneous connections or high-bandwidth use cases.

- Each Connector must be provisioned with its own unique pair of tokens. Tokens are generated when you provision a Connector in the Twingate Admin Console, so you should provision one Connector there for each Connector you deploy. Attempting to re-use tokens for multiple Connectors will result in a connection failure for the Connector.

- Connectors on the same Twingate Remote Network should have the same network scope and permissions. Because Connectors on the same Twingate Remote Network are clustered, they are intended to be interchangeable and should be configured that way. Network permissions should be the same for every Connector to ensure that Resources are always accessible, regardless of which Connector is in use.

- Connectors should be deployed on the same network as target Resources, if possible. A significant benefit of establishing your Twingate network is that traffic is routed directly from users’ devices to the Resource they are accessing. Because Connectors serve as the destination exit point for traffic, it’s important that the “last mile” between the Connector and any Resources it serves is as short as possible to provide users with the best performance.
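The redundancy and token recommendations above can be checked mechanically before a rollout. The following is a minimal sketch, not part of any Twingate tooling: the `Connector` record, its `token_id` field (a label for a token pair, never the secret itself), and `check_plan` are all hypothetical names introduced for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Connector:
    # All fields are hypothetical labels used only by this sketch.
    name: str
    remote_network: str
    token_id: str  # identifies a token pair; do not store the secret here


def check_plan(connectors: list[Connector]) -> list[str]:
    """Return human-readable warnings for a planned Connector deployment."""
    warnings = []

    # Redundancy: at least two Connectors per Remote Network.
    per_network = Counter(c.remote_network for c in connectors)
    for network, count in sorted(per_network.items()):
        if count < 2:
            warnings.append(f"{network}: only {count} Connector, deploy at least 2")

    # Tokens: every Connector needs its own token pair.
    per_token = Counter(c.token_id for c in connectors)
    for token_id, count in sorted(per_token.items()):
        if count > 1:
            warnings.append(f"token {token_id} is reused by {count} Connectors")

    return warnings
```

For example, a plan with two Connectors in `prod` and a single Connector in `dev` that shares a token with one of them would produce one warning for the missing redundancy and one for the reused token.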

Network Requirements

Regardless of your chosen deployment method, the following principles apply:

- Connectors only require outbound Internet access. Inbound Internet access to a Connector host is neither required nor recommended from a security standpoint.

  If you wish to limit outbound connectivity, you may limit to:

  - Outbound initiated TCP Port: 443 (basic communication with the Twingate Controller and Relay infrastructure)
  - Outbound initiated TCP Ports: 30000-31000 (opening connections with Twingate Relay infrastructure in case peer-to-peer is unavailable)
  - Outbound initiated UDP and QUIC for HTTP/3 (see this guide for more information) Ports: 1-65535 (allows for peer-to-peer connectivity for optimal performance)

- Ensure that Connectors have both permission and routing rules to access private Resources. Resources that you configure in the Admin console will be resolved and routed from the Connector host. If ICMP traffic is a requirement for your environment, ensure that your Connector host has permission to send ICMP traffic to the target Resources.

These same principles apply if you are using Connectors as public exit nodes, except that you need to ensure that the Connector host has a static public IP assigned to it, either directly or via a NAT Internet gateway. This is the IP address you will whitelist in the service to be used with the exit node.
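When translating the outbound port list above into firewall policy, it can help to hold the rules as data and test proposed connections against them. The sketch below is illustrative only: `OutboundRule`, `CONNECTOR_OUTBOUND_RULES`, and `matching_rule` are hypothetical names, and the only values taken from this page are the protocols and port ranges.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OutboundRule:
    protocol: str  # "tcp" or "udp"
    first_port: int
    last_port: int
    purpose: str


# Protocols and port ranges as listed in the Network Requirements section.
CONNECTOR_OUTBOUND_RULES = [
    OutboundRule("tcp", 443, 443, "Controller and Relay communication"),
    OutboundRule("tcp", 30000, 31000, "Relay connections when peer-to-peer is unavailable"),
    OutboundRule("udp", 1, 65535, "peer-to-peer connectivity"),
]


def matching_rule(protocol: str, port: int) -> Optional[OutboundRule]:
    """Return the first rule that permits this outbound connection, or None."""
    wanted = protocol.lower()
    for rule in CONNECTOR_OUTBOUND_RULES:
        if rule.protocol == wanted and rule.first_port <= port <= rule.last_port:
            return rule
    return None
```

Under these rules, `matching_rule("tcp", 8080)` returns `None`, which is a quick way to confirm that a restricted policy does not allow more outbound TCP than the Connector needs.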

Hardware Considerations

In general, the host that the Connector is running on should be optimized for, in decreasing order of importance:

- Network bandwidth

- Memory

- CPU

If a Connector host becomes resource-bound, you can deploy additional Connectors within the same Remote Network. Twingate will automatically load balance across multiple Connectors in the same logical Remote Network that you define within Twingate.

Twingate Connectors are optimized to run on the recommended hardware configurations for the platforms on which they’re deployed. If you encounter bandwidth or performance issues, the best course of action is to deploy additional Connectors to handle the load. Adding additional CPU or memory resources to a single Connector will not improve performance.

Below we have some platform-specific machine recommendations for Connectors.

AWS

A t3a.micro Linux EC2 instance is a cost-effective choice and sufficient to handle bandwidth for hundreds of remote users under typical usage patterns.

Google Cloud

An e2-small machine is sufficient as a starting point for hundreds of remote users under typical usage patterns.

Azure

For Azure, we recommend using their Container Instance service, which does not require hardware selection.

Please note that if you are using a custom DNS server for your VNet, it will not be recognized by the Container Instance service automatically. In this situation, we recommend using the ‘Custom DNS’ option when deploying your Connector and specifying your DNS server manually.

On-premise / Colo / VPS

A Linux VM allocated with 1 CPU and 2GB RAM is sufficient to handle hundreds of remote users under typical usage patterns.

- Any Linux distribution that supports deployment via systemd, Docker or Helm on an x86, AMD64, or ARM-based system is acceptable.

Connector Load Balancing & Failover

If you deploy multiple Connectors within the same logical Remote Network, Twingate will detect this and you will automatically benefit from Connector load balancing and failover redundancy.

When there is more than one Connector in a Remote Network, Twingate takes advantage of the redundancy and will automatically distribute clients connecting to Resources on that Remote Network among the various Connectors for load balancing purposes. If a client is unable to connect to a particular Connector (e.g. because the machine on which the Connector is installed goes offline), the client will try connecting to other Connectors in the same Remote Network until a connection is successfully established.

If a Connector is later removed from or added to a Remote Network, Twingate will automatically adjust for that change.

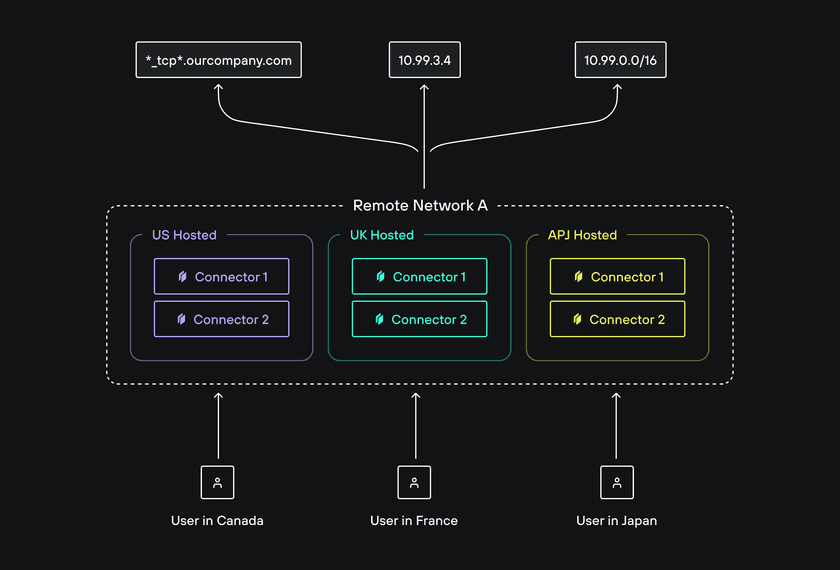
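The failover behavior described above can be pictured with a small simulation. This is a sketch under stated assumptions, not Twingate's implementation: the page does not say how clients are distributed, so the random ordering here is only one plausible way to spread load, and `connect_with_failover` and `try_connect` are hypothetical names.

```python
import random
from typing import Callable, Optional, Sequence


def connect_with_failover(
    connectors: Sequence[str],
    try_connect: Callable[[str], bool],
    rng: Optional[random.Random] = None,
) -> Optional[str]:
    """Try Connectors in random order; return the first that accepts, else None."""
    rng = rng or random.Random()
    candidates = list(connectors)
    # Assumption: a random order stands in for load balancing across Connectors.
    rng.shuffle(candidates)
    for name in candidates:
        # Failover: move on to the next Connector when one is unreachable.
        if try_connect(name):
            return name
    return None
```

With `try_connect` reporting only `connector-b` as reachable, the function returns `connector-b` whichever Connector is tried first, which mirrors a client retrying within the same Remote Network until a connection is established.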

Geographic routing

In situations where you have replicated services that are hosted in multiple geographic locations, you can deploy Connectors in each location to ensure that users are routed to the nearest Connector. This can help to reduce latency and improve performance. Twingate will automatically route users to the nearest Connector based on their location.

To take advantage of geographic routing, you will need to create a single Remote Network to host all of the Resources that are replicated across multiple locations and deploy Connectors in each location. Users will then be routed to the nearest active Connector.
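As a rough illustration of "nearest active Connector", the sketch below picks the active Connector with the lowest measured latency. Using latency as the measure of nearness is an assumption made for this example; the page only states that routing is based on user location. `nearest_active_connector` and its inputs are hypothetical.

```python
from typing import Mapping, Optional, Set


def nearest_active_connector(
    latency_ms: Mapping[str, float],
    active: Set[str],
) -> Optional[str]:
    """Return the active Connector with the lowest latency, or None if none is active."""
    # Ignore Connectors that are offline, however close they are.
    candidates = {name: ms for name, ms in latency_ms.items() if name in active}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)
```

If the closest Connector goes offline, removing it from `active` makes the function fall back to the next closest one, which matches the expectation that users are routed to the nearest Connector that is still active.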

Last updated 3 months ago