How to Secure Site-to-Site Connections with Twingate

Configuring site-to-site connectivity with Twingate

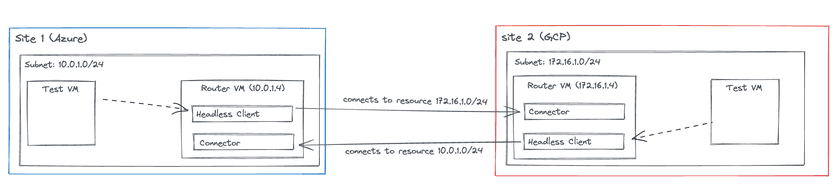

This guide describes how to configure Twingate to allow traffic to route between sites. The Twingate Client in headless mode will forward traffic destined for a specific IP address or subnet to a Twingate Connector in a remote site. The following diagram illustrates the approach we will implement in this guide:

Peer-to-peer connections help you to provide a better experience for your users and to stay within the Fair Use Policy for bandwidth consumption. Learn how to support peer-to-peer connections.

Overall Twingate Setup

At a high level, we will configure Twingate as follows:

- Set up new Remote Networks for Azure (site 1) and GCP (site 2)

- Set up new Connectors in both sites

- Set up new Service Accounts (and Twingate Clients in headless mode) in both sites

Setting up Azure (site 1)

On the Azure side, we will need to complete the following tasks:

- Set up a new Resource group

- Set up a new virtual network (vnet) and subnet

- Deploy the Twingate Connector

- Deploy the Twingate Client in headless mode

- Deploy a test VM for connectivity testing from site 2 to site 1

Setting up GCP (site 2)

- Set up a new VPC and subnet

- Deploy the Twingate Connector

- Deploy the Twingate Client in headless mode

- Deploy a test VM for connectivity testing from site 1 to site 2

Twingate Setup

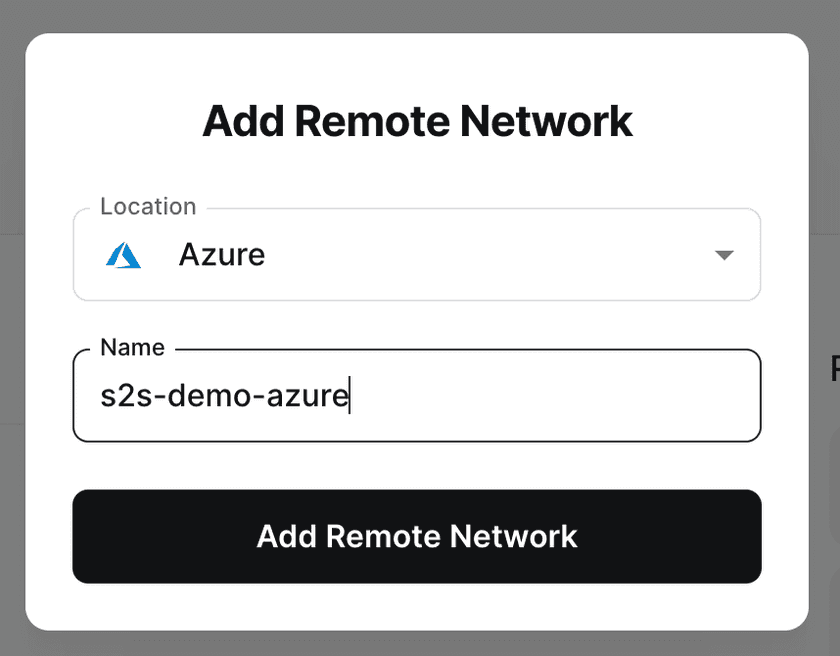

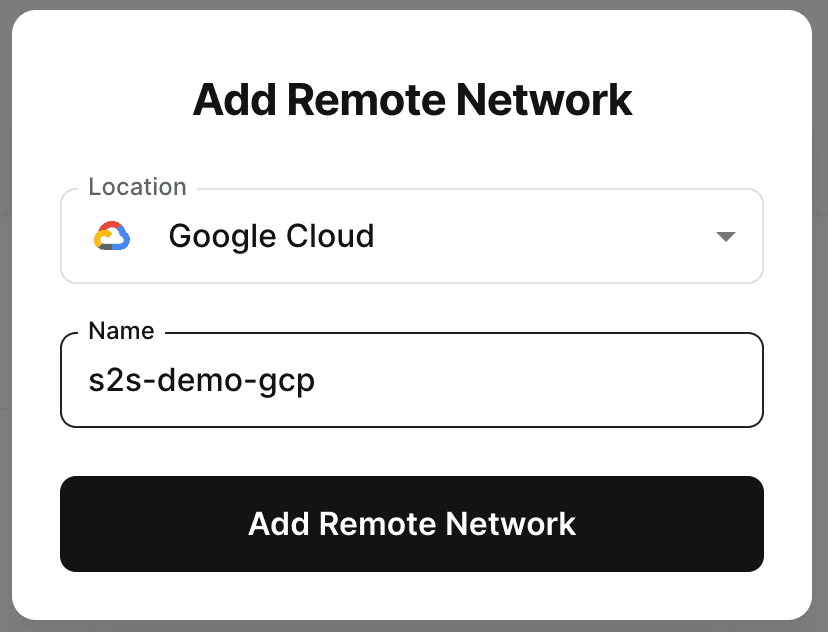

Set up new Remote Networks for Azure (site 1) and GCP (site 2)

Navigate to your Networks tab in the Twingate Admin Console and add a Remote Network for each site:

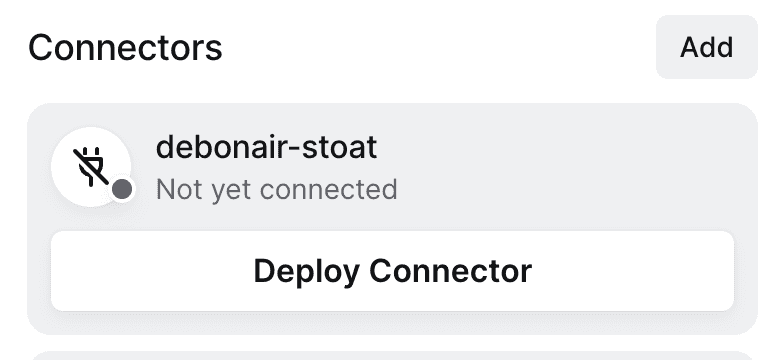

Create a Connector for site 1 (Azure)

Navigate to your new Azure Remote Network in the Twingate Admin Console and click on the Deploy Connector button:

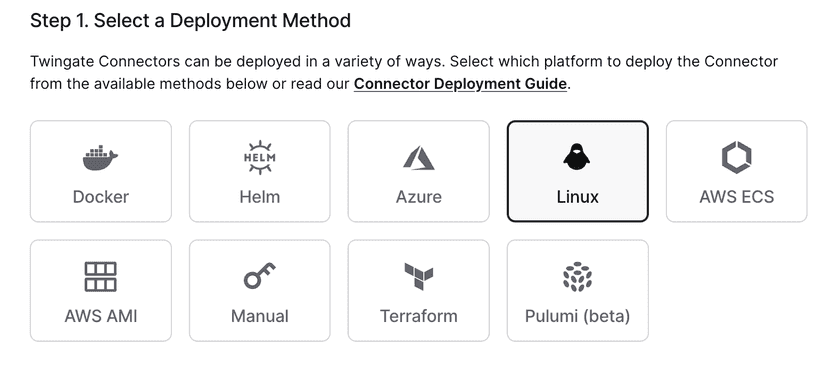

At this point you can choose to deploy to Azure, but because that option uses ACS, which requires its own vnet, we will use a basic Linux deployment in Azure to keep things simple.

Select Linux from the options:

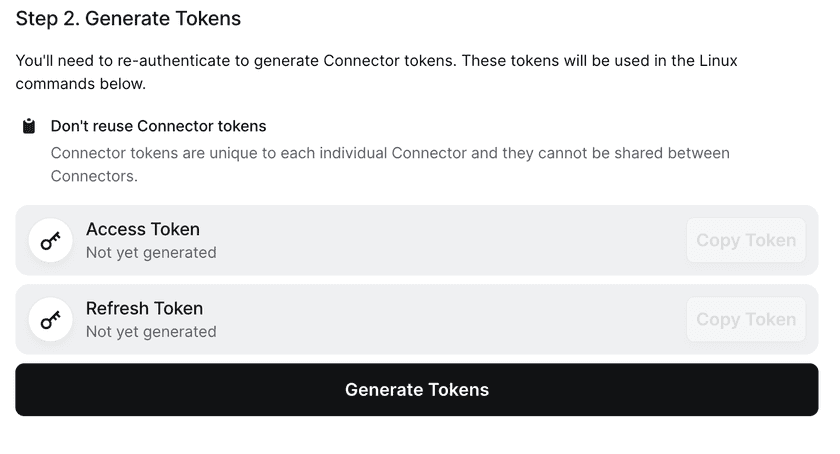

Scroll down and generate your tokens:

Finally, copy the command at the bottom of the screen and keep it safe. We will use this command to deploy the Connector in Azure.

Create a Connector for site 2 (GCP)

Follow the same steps as above to create a new Connector in the GCP Remote Network.

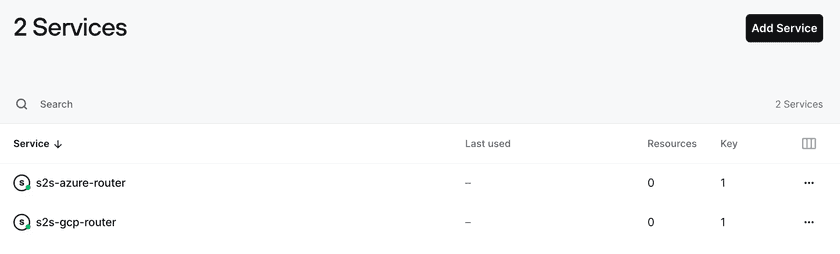

Create Service Accounts

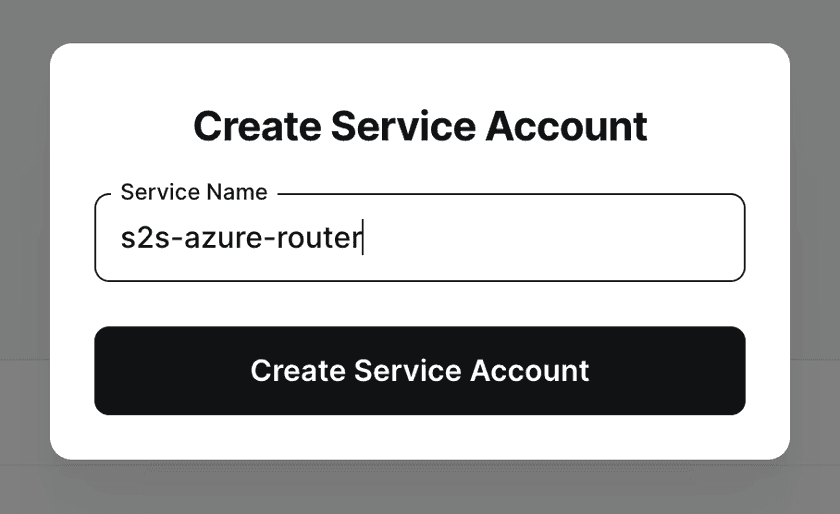

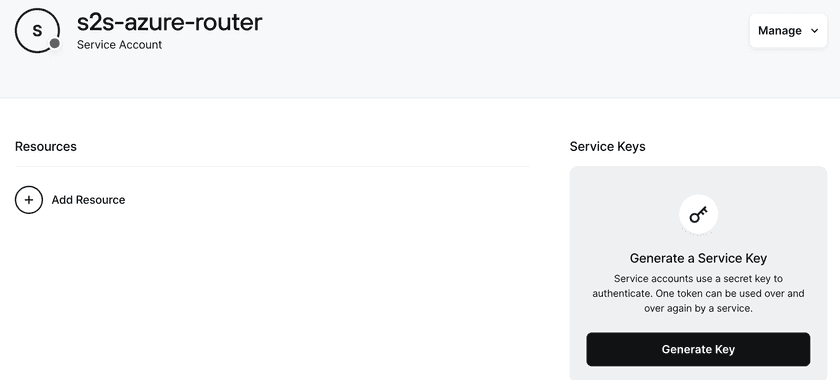

We will be using Service Accounts to authenticate the Twingate Clients in headless mode on both sites. In your Admin Console, navigate to Team, then Services, and click on Add Service to create two Service Accounts:

When generating a key for each account, make sure you copy it and store it somewhere safe. We will need it when deploying Twingate Clients in headless mode.

Site 1 setup (Azure)

Setup

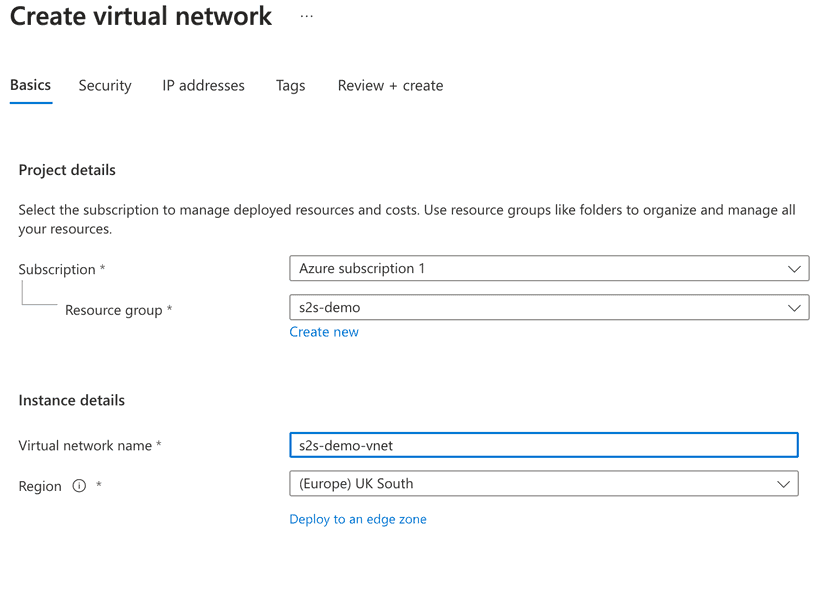

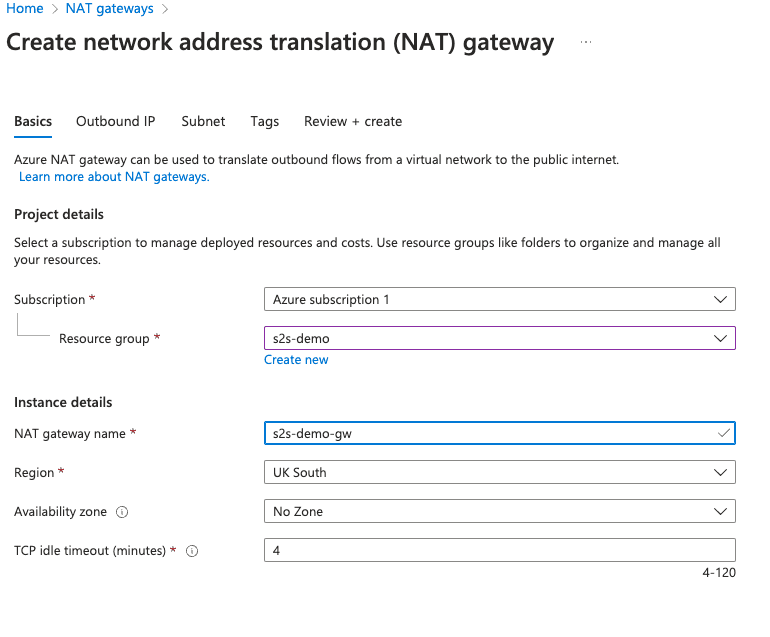

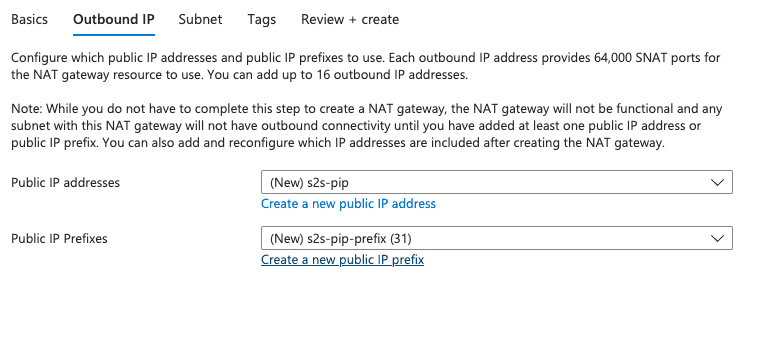

Before deploying the Connector, we will create a new Azure resource group, Azure network, NAT gateway and subnet that will contain the Connector along with the test VM. Navigate to the Azure portal to create these items:

Create a Resource Group

Create a vnet

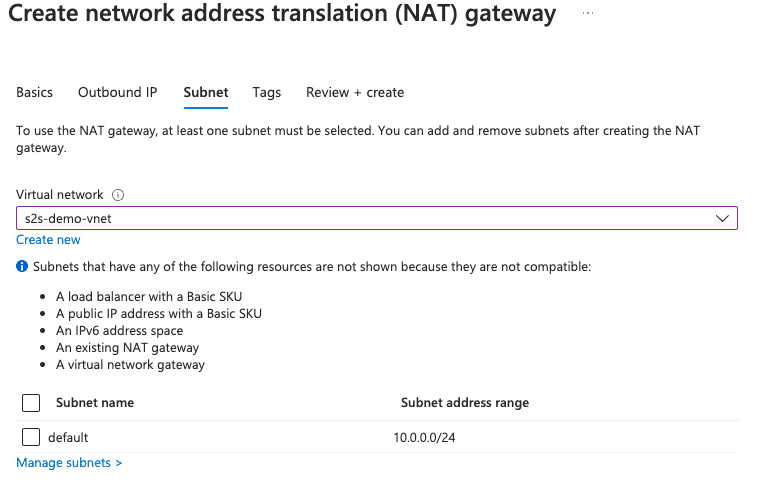

Create a NAT gateway

Note that you do not need to select a subnet at this point as we will create it during the next step and associate it to our NAT gateway.

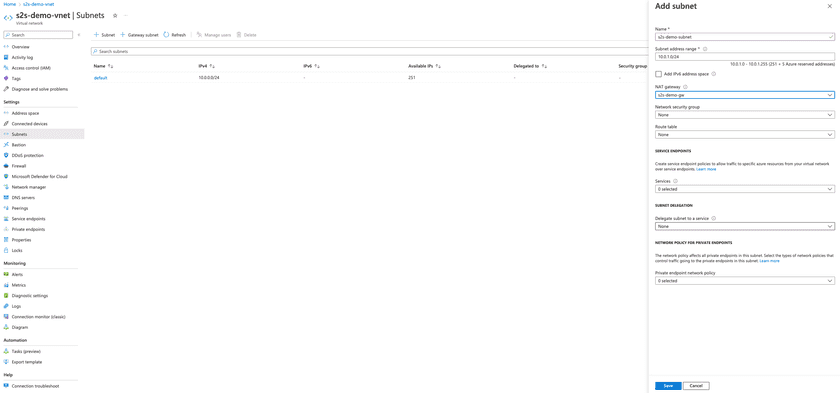

Create a subnet

When creating a subnet, you should assign the previously created NAT gateway to it.
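The same resources could also be created with the Azure CLI instead of the portal. The sketch below is illustrative only: the resource names, the `westeurope` region, and the `10.0.0.0/16` vnet range are assumptions, while `10.0.1.0/24` matches the site 1 subnet used later in this guide. By default it only prints the commands; set `DRY_RUN=0` to execute them.

```shell
#!/bin/sh
# Hypothetical names and region; adjust them to your environment.
RG="${RG:-s2s-demo-rg}"
LOCATION="${LOCATION:-westeurope}"
VNET="${VNET:-s2s-demo-vnet}"
SUBNET="${SUBNET:-s2s-demo-subnet}"
NAT_GW="${NAT_GW:-s2s-demo-natgw}"
NAT_IP="${NAT_IP:-s2s-demo-natgw-ip}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_site1_network() {
  run az group create --name "$RG" --location "$LOCATION"
  run az network vnet create --resource-group "$RG" --name "$VNET" \
    --address-prefixes 10.0.0.0/16
  # A NAT gateway needs a Standard SKU public IP for outbound traffic.
  run az network public-ip create --resource-group "$RG" --name "$NAT_IP" --sku Standard
  run az network nat gateway create --resource-group "$RG" --name "$NAT_GW" \
    --public-ip-addresses "$NAT_IP"
  # Create the subnet and attach the NAT gateway in the same step.
  run az network vnet subnet create --resource-group "$RG" --vnet-name "$VNET" \
    --name "$SUBNET" --address-prefixes 10.0.1.0/24 --nat-gateway "$NAT_GW"
}

create_site1_network
```

Reviewing the printed commands before running them with `DRY_RUN=0` makes it easier to catch a wrong name or address range.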

Deploy the site 1 Connector

We will be using a standard Linux VM to run the Connector.

Make sure to disable the public IP address on the Connector host VM and configure NAT so it can access the internet.

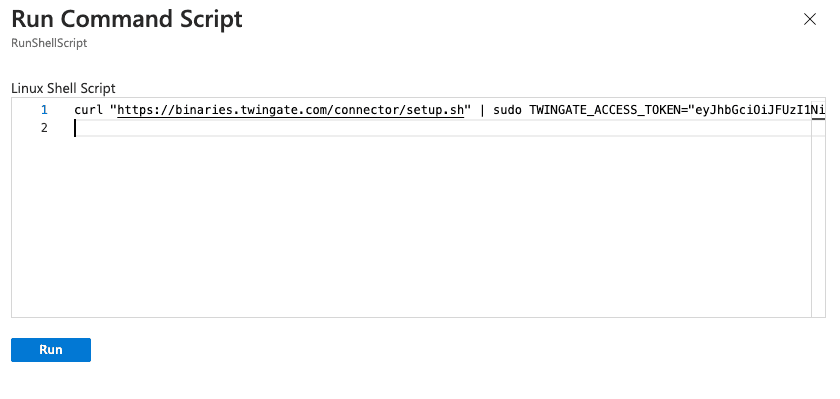

If you don’t have SSH access to the VM, you can use the Run command option on the VM to deploy the Connector, as shown below:

Next, copy and paste the Connector creation command you previously generated in the Admin Console (the same command can be used via SSH as well):
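For reference, the portal's Run command feature is also available through the Azure CLI as `az vm run-command invoke`. The sketch below assumes hypothetical resource group and VM names, and `CONNECTOR_CMD` is a placeholder for the command you copied from the Admin Console (it is deliberately not filled in here). In dry-run mode, the default, it prints the call without the command body so that tokens do not end up in logs.

```shell
#!/bin/sh
RG="${RG:-s2s-demo-rg}"                              # hypothetical resource group
CONNECTOR_VM="${CONNECTOR_VM:-s2s-demo-connector}"   # hypothetical VM name
DRY_RUN="${DRY_RUN:-1}"

deploy_connector_via_run_command() {
  if [ "$DRY_RUN" = "1" ]; then
    # Show the call without the Connector command, which contains tokens.
    printf 'az vm run-command invoke --resource-group %s --name %s --command-id RunShellScript --scripts <CONNECTOR_CMD>\n' \
      "$RG" "$CONNECTOR_VM"
  else
    # CONNECTOR_CMD must hold the command generated in the Admin Console.
    az vm run-command invoke --resource-group "$RG" --name "$CONNECTOR_VM" \
      --command-id RunShellScript \
      --scripts "${CONNECTOR_CMD:?set CONNECTOR_CMD to the command from the Admin Console}"
  fi
}

deploy_connector_via_run_command
```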

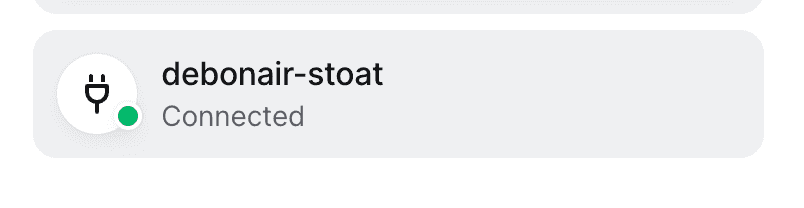

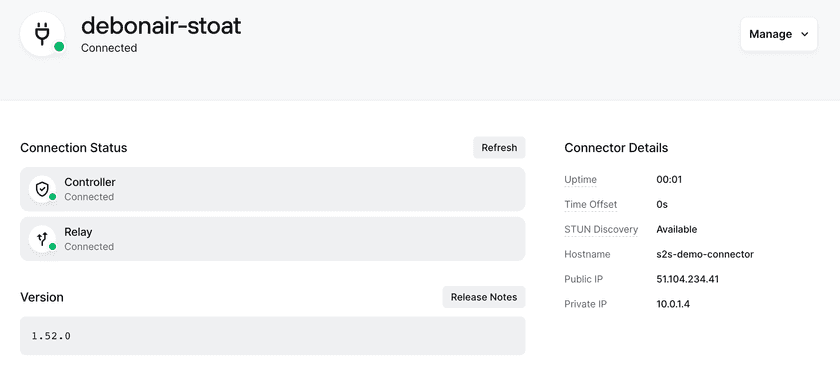

After a few moments, you should see the Connector go live in the Twingate Admin Console:

Deploy the Twingate Client in headless mode on the router VM (site 1)

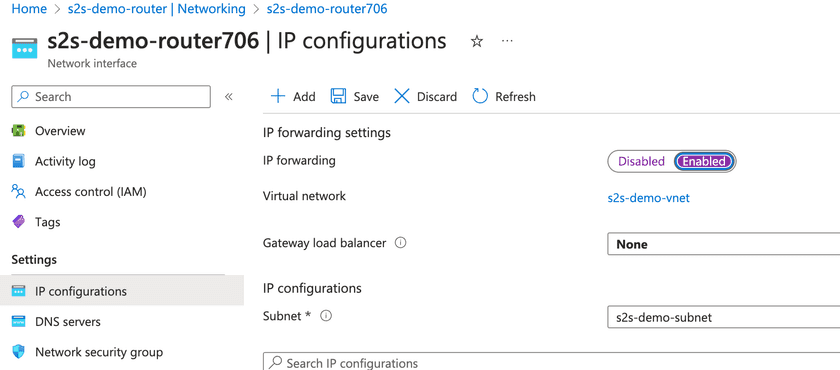

Deploy another Linux VM (no public IP address needed). It will route traffic from the network through the Twingate Client. Make sure IP forwarding is enabled on the VM once it is deployed.

Note that if you don’t have remote access to this new VM, you can add its private IP address as a Resource in Twingate and gain access to it via the Twingate Client.

Once connected to the VM via SSH, enter the following command in the CLI:

    curl https://binaries.twingate.com/client/linux/install.sh | sudo bash

Once run, we need to configure the service key by creating a new file:

    nano /tmp/service_key.json

Copy the contents of the key retrieved earlier into this file and save it.

Run the following command to configure Twingate to use this key:

    sudo twingate setup --headless /tmp/service_key.json

You will see that this has completed the setup:

    Twingate Setup 1.X.XX+XXXXX | X.XXX.X
    Copying service key
    Setup is complete.

Start the Twingate Client:

    sudo twingate start

The Client should start and report as online:

    Starting Twingate service in headless mode
    Twingate has been started
    Waiting for status...
    online

Now that our router VM is configured with a Twingate Client, we will need to set it up to route the traffic from inside the network.
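If you script the headless setup above, it can help to wait for the Client to report `online` before moving on to the routing configuration. The following is a minimal sketch, assuming `twingate status` prints `online` once connected (consistent with the start output above). The `STATUS_CMD` and `POLL_INTERVAL` variables are hypothetical knobs added for illustration.

```shell
#!/bin/sh
# Poll a status command until it prints "online" or the attempts run out.
# STATUS_CMD defaults to "twingate status"; override it to try the loop
# without a Client installed.
wait_for_online() {
  attempts="${1:-30}"
  status_cmd="${STATUS_CMD:-twingate status}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # $status_cmd is intentionally unquoted so it splits into command + args.
    if $status_cmd 2>/dev/null | grep -qx 'online'; then
      return 0
    fi
    i=$((i + 1))
    sleep "${POLL_INTERVAL:-2}"
  done
  return 1
}

# Possible usage on the router VM:
#   chmod 600 /tmp/service_key.json   # keep the key readable by its owner only
#   sudo twingate setup --headless /tmp/service_key.json
#   sudo twingate start
#   wait_for_online 30 || echo "Client did not come online" >&2
```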

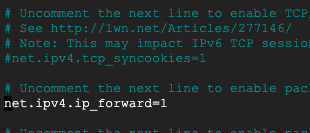

First, allow IP forwarding by editing /etc/sysctl.conf:

    sudo nano /etc/sysctl.conf

Scroll down and uncomment net.ipv4.ip_forward=1:

Save and close the file.
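On VMs without an interactive editor, the same change could be made non-interactively. The helper below is a sketch rather than an official procedure: `enable_ip_forward` is a hypothetical function name, and it assumes GNU `sed` (for `-i`), which is standard on the Linux distributions used here. It uncomments the setting if it is commented out, appends it if it is missing, and leaves the file alone if it is already enabled.

```shell
#!/bin/sh
# Enable net.ipv4.ip_forward=1 in a sysctl config file (default /etc/sysctl.conf).
enable_ip_forward() {
  conf="${1:-/etc/sysctl.conf}"
  setting='net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1'
  if grep -Eq "^[[:space:]]*${setting}" "$conf"; then
    return 0  # already enabled, nothing to do
  fi
  if grep -Eq "^[[:space:]]*#[[:space:]]*${setting}" "$conf"; then
    # Strip the leading comment marker from the existing line.
    sed -i -E "s/^[[:space:]]*#[[:space:]]*(${setting})/\1/" "$conf"
  else
    printf 'net.ipv4.ip_forward=1\n' >> "$conf"
  fi
}

# Possible usage (as root): enable_ip_forward /etc/sysctl.conf
```

After applying the change, `sysctl net.ipv4.ip_forward` shows the value currently in effect.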

Run the following command to apply the changes:

    sudo sysctl -p

Next, we need to make changes to iptables on the router VM:

First, add a rule to allow traffic from the inside interface to be passed through the forward chain, tracking it as a new connection.

Note that you will likely need to replace the names of the interfaces ens4 and sdwan0 with your own before running the commands below.

- ens4 is the internal private network interface of the router VM

- sdwan0 is the virtual network interface created by the Twingate Client
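To find the interface names on your own router VM, you can inspect the output of `ip -o link show` and `ip route show default`. The two helpers below are a small sketch for extracting the names from that output; `list_interfaces` and `default_interface` are hypothetical function names, and both read the command output from standard input.

```shell
#!/bin/sh
# Print interface names from `ip -o link show` output read on stdin.
list_interfaces() {
  # Lines look like "2: ens4: <BROADCAST,...> mtu 1460 ..."; field 2 is the name.
  awk -F': ' '{ sub(/@.*/, "", $2); print $2 }'
}

# Print the interface used by the default route from `ip route show default`.
default_interface() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Possible usage on the router VM:
#   ip -o link show | list_interfaces
#   ip route show default | default_interface
```

The interface carrying the default route is usually the internal private interface, and the Twingate interface appears in the list once the Client is running.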

    sudo iptables -A FORWARD -i ens4 -o sdwan0 -j ACCEPT

Second, add a rule to ensure that return traffic for tracked connections originating from inside is allowed back in:

    sudo iptables -A FORWARD -i sdwan0 -o ens4 -m state --state RELATED,ESTABLISHED -j ACCEPT

Third, add a rule to the POSTROUTING chain of the NAT table so that forwarded traffic leaving through the Twingate Client network interface (sdwan0 in this case) has its source IP address replaced with the IP address of that interface. This ensures NAT for traffic where the destination IP address matches that of a Twingate Resource.

    sudo iptables -t nat -A POSTROUTING -o sdwan0 -j MASQUERADE

To ensure these rules remain when the router VM is restarted, we can optionally install the iptables-persistent package:

    sudo apt install iptables-persistent -y

Enter YES on both screens when they pop up.

Our router VM configuration is now complete!
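As a recap, the three rules could be applied by a small script that is safe to run more than once. This is a sketch, not part of the official procedure: it uses `iptables -C` to check whether a rule already exists before appending it, and the `LAN_IF`, `TG_IF`, and `IPTABLES` variables are hypothetical names introduced here. The interface defaults are the ones used in this guide and will likely differ on your VM.

```shell
#!/bin/sh
# Interface names are assumptions; replace them with the values from your router VM.
LAN_IF="${LAN_IF:-ens4}"
TG_IF="${TG_IF:-sdwan0}"
IPTABLES="${IPTABLES:-sudo iptables}"

# Append a rule to a table only if an identical rule is not already present.
ensure_rule() {
  table="$1"
  shift
  if ! $IPTABLES -t "$table" -C "$@" 2>/dev/null; then
    $IPTABLES -t "$table" -A "$@"
  fi
}

apply_router_rules() {
  # Allow traffic from the internal network out through the Twingate interface.
  ensure_rule filter FORWARD -i "$LAN_IF" -o "$TG_IF" -j ACCEPT
  # Allow replies that belong to connections opened from the inside.
  ensure_rule filter FORWARD -i "$TG_IF" -o "$LAN_IF" \
    -m state --state RELATED,ESTABLISHED -j ACCEPT
  # Rewrite the source address of traffic leaving through the Twingate interface.
  ensure_rule nat POSTROUTING -o "$TG_IF" -j MASQUERADE
}

# Possible usage: LAN_IF=eth0 apply_router_rules
```

Running `sudo iptables -S FORWARD` and `sudo iptables -t nat -S POSTROUTING` afterwards lists the rules that are in place.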

Deploy a test VM

To demonstrate communication between the two sites, we will deploy a basic VM to the network. This can be a simple Linux VM in the same subnet as the router VM.

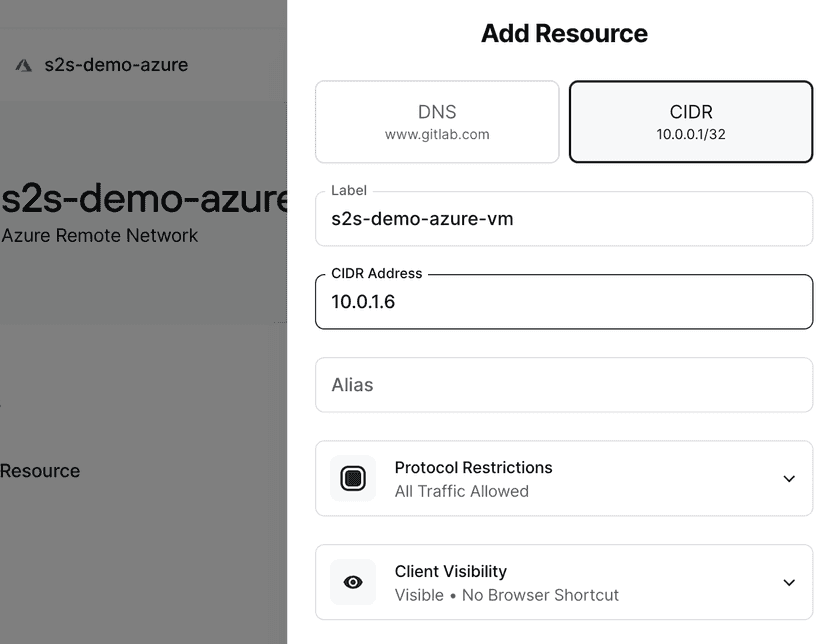

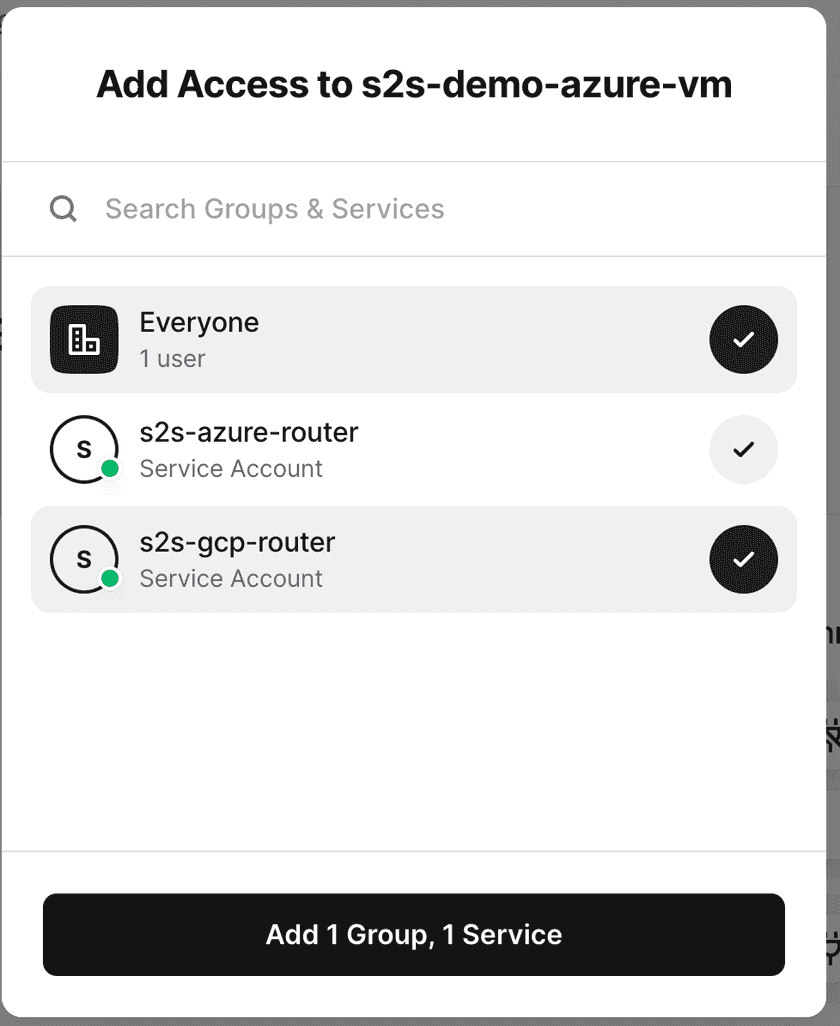

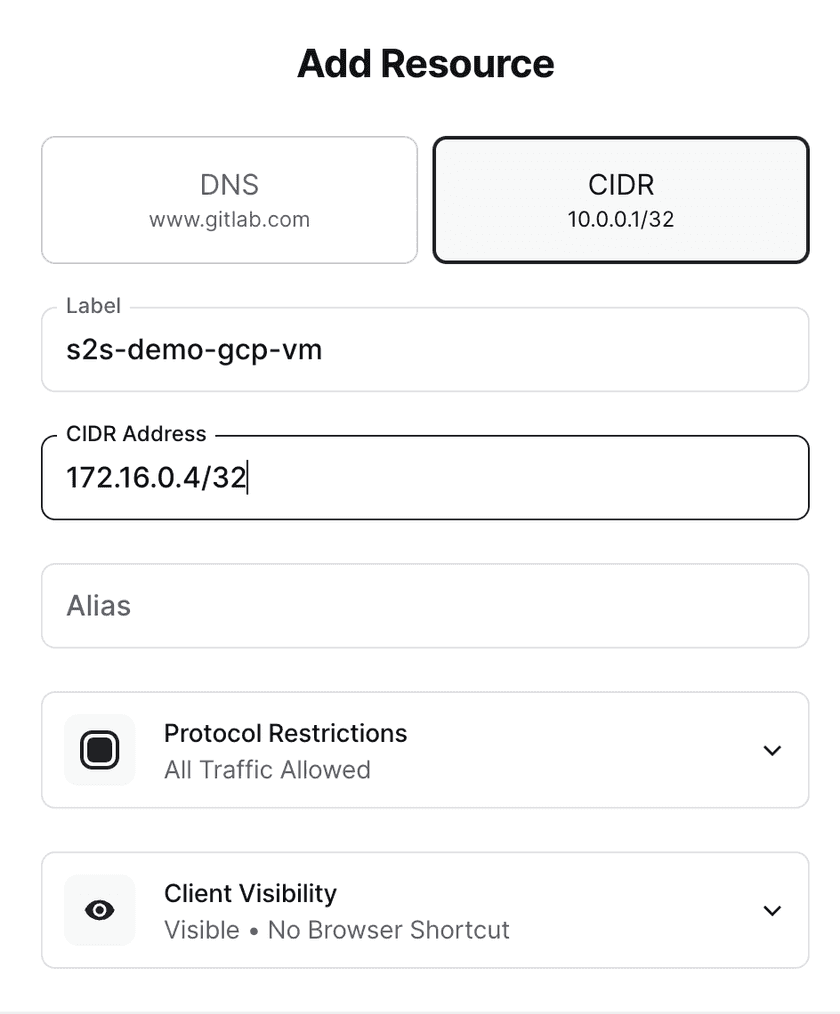

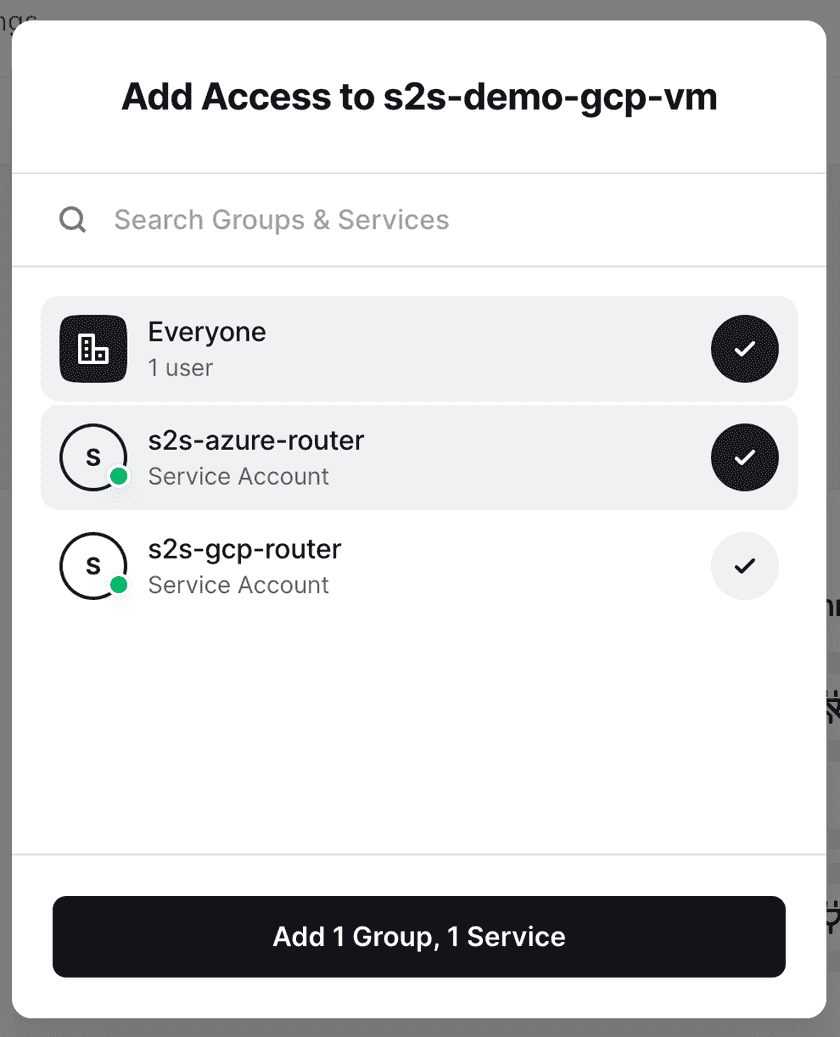

Once this is deployed, we can add it as a Resource in Twingate inside the Azure Remote Network. Assign the Resource to the Service Account created for site 2:

Site 2 setup (GCP)

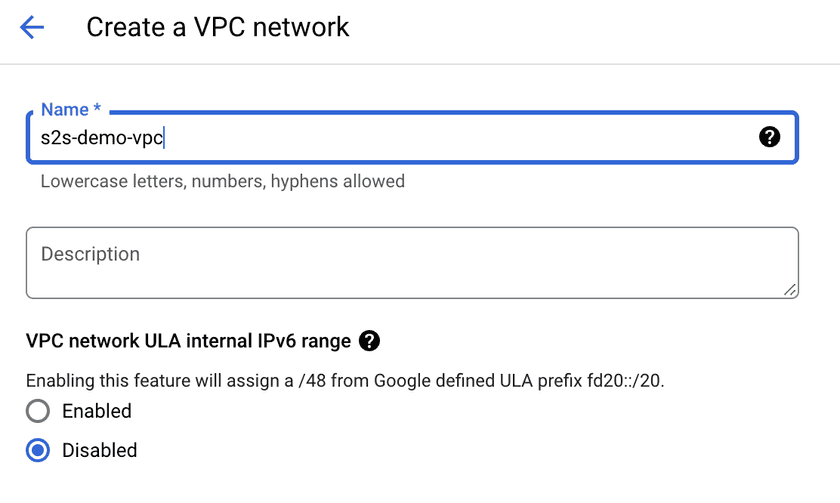

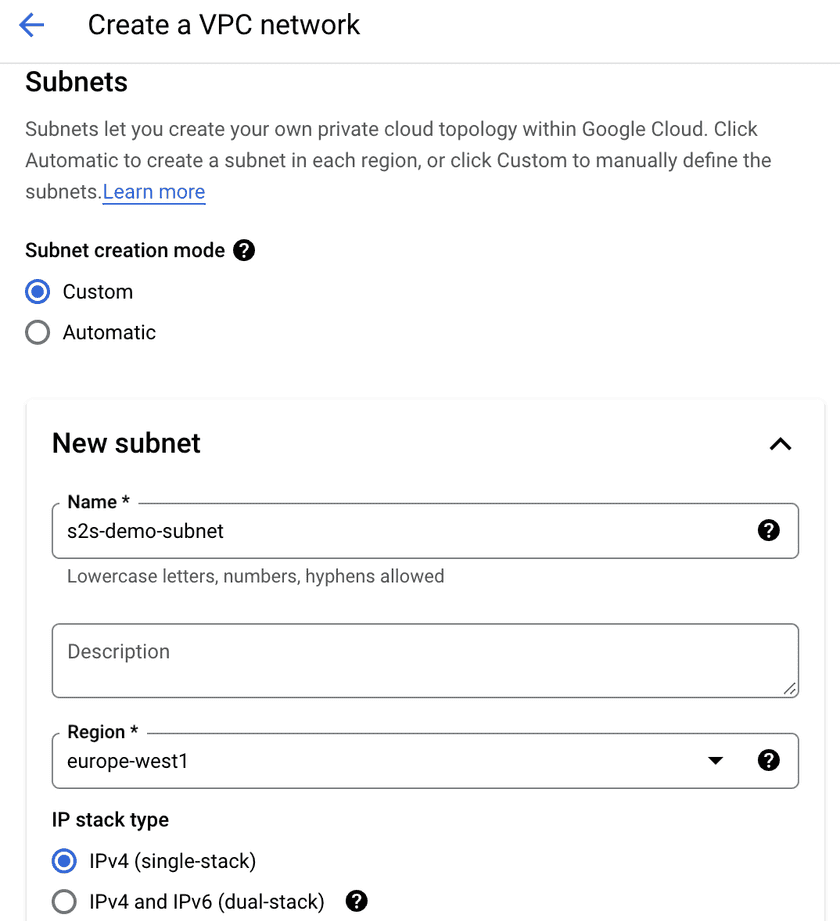

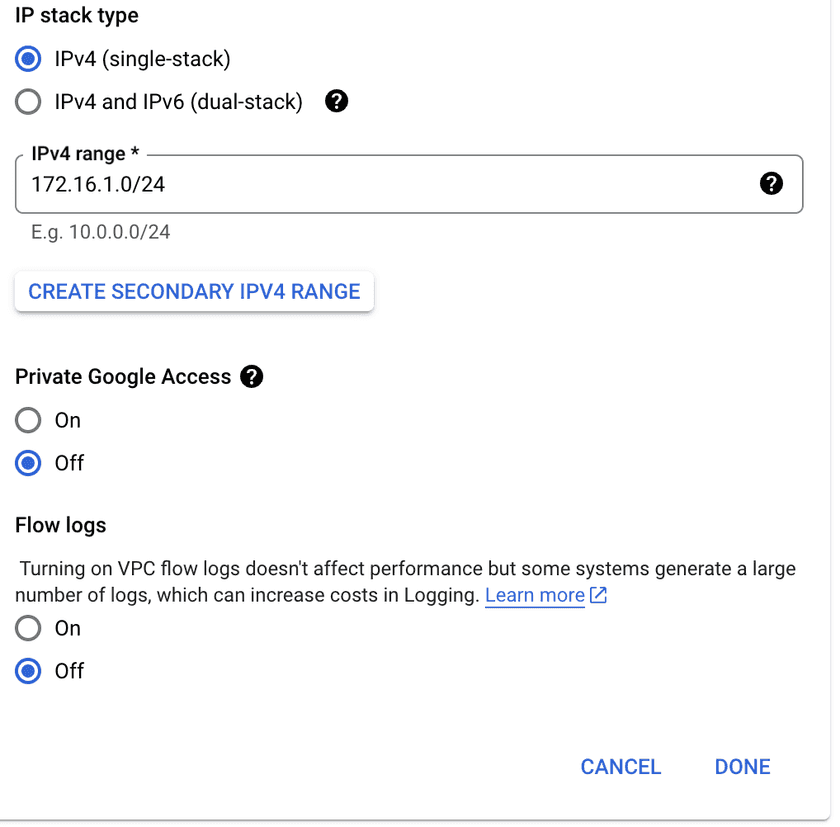

Similar to the Azure setup, we will create a new VPC and subnet which will contain our resources:

Deploy the site 2 Connector

Similarly to our setup in Azure, you should disable the public network interface and use Cloud NAT to access the internet. Make sure Cloud NAT is set up before deploying the Connector VM; otherwise, the VM will not be able to download and install the Twingate Connector.
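The VPC, subnet, and Cloud NAT could also be created with the `gcloud` CLI. The following is a sketch under stated assumptions: all resource names and the `us-central1` region are hypothetical, and `172.16.1.0/24` is the site 2 subnet used later in this guide. It prints the commands by default; set `DRY_RUN=0` to execute them.

```shell
#!/bin/sh
# Hypothetical names and region; adjust them to your project.
GCP_VPC="${GCP_VPC:-s2s-demo-vpc}"
GCP_SUBNET="${GCP_SUBNET:-s2s-demo-subnet}"
GCP_REGION="${GCP_REGION:-us-central1}"
GCP_ROUTER="${GCP_ROUTER:-s2s-demo-cloud-router}"
GCP_NAT="${GCP_NAT:-s2s-demo-nat}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_site2_network() {
  run gcloud compute networks create "$GCP_VPC" --subnet-mode=custom
  run gcloud compute networks subnets create "$GCP_SUBNET" \
    --network="$GCP_VPC" --region="$GCP_REGION" --range=172.16.1.0/24
  # Cloud NAT is configured on a Cloud Router in the same region.
  run gcloud compute routers create "$GCP_ROUTER" \
    --network="$GCP_VPC" --region="$GCP_REGION"
  run gcloud compute routers nats create "$GCP_NAT" \
    --router="$GCP_ROUTER" --region="$GCP_REGION" \
    --auto-allocate-nat-external-ips --nat-all-subnet-ip-ranges
}

create_site2_network
```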

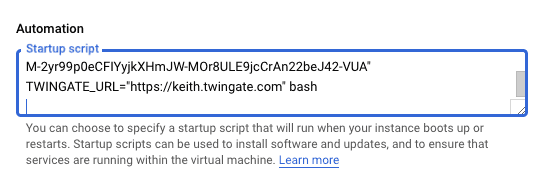

Deploy the Connector by creating a new Linux VM in the newly created VPC and subnet, running through the same instructions as above, but using the command stored for the site 2 (GCP) Connector.

(You can use an automation script to install the Connector without needing remote access to the VM.)

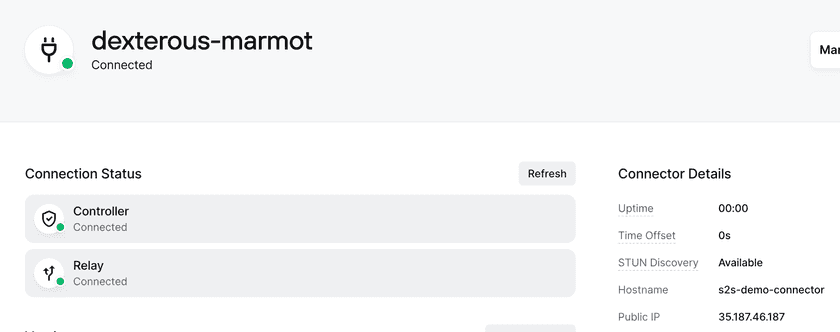

Once deployed, you should verify that the Connector is shown as online:

Deploying the Twingate Client in headless mode

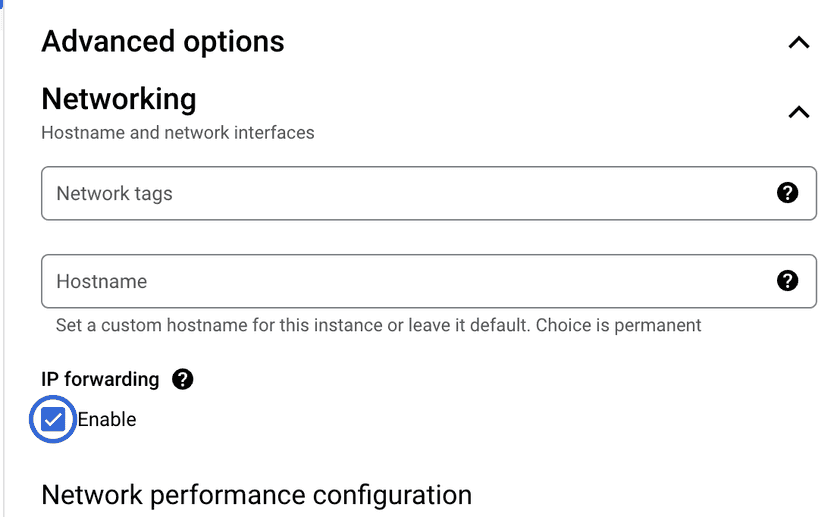

This is identical to the process followed for site 1 (Azure). However, you do need to enable IP forwarding when creating the VM itself (this is specific to GCP):
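If you create the router VM from the command line rather than the console, the equivalent setting is the `--can-ip-forward` flag of `gcloud compute instances create`. The sketch below assumes hypothetical VM, zone, VPC, and subnet names, and only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Hypothetical names and zone; adjust them to your project.
GCP_ROUTER_VM="${GCP_ROUTER_VM:-s2s-demo-router-vm}"
GCP_ZONE="${GCP_ZONE:-us-central1-a}"
GCP_VPC="${GCP_VPC:-s2s-demo-vpc}"
GCP_SUBNET="${GCP_SUBNET:-s2s-demo-subnet}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_router_vm() {
  # --can-ip-forward lets the VM forward packets that are not addressed to it;
  # --no-address creates the VM without a public IP address.
  run gcloud compute instances create "$GCP_ROUTER_VM" \
    --zone="$GCP_ZONE" --network="$GCP_VPC" --subnet="$GCP_SUBNET" \
    --can-ip-forward --no-address
}

create_router_vm
```

To confirm the setting on an existing VM, `gcloud compute instances describe` with `--format='get(canIpForward)'` reports whether forwarding is enabled.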

Follow the same steps in order to deploy the Twingate Client in headless mode and remember to use the Service Account key created earlier for the GCP service.

Once run, you should see it connect successfully:

    Starting Twingate service in headless mode
    Twingate has been started
    Waiting for status...
    online

Similar to site 1, run the following commands to configure iptables on the router VM for site 2. Remember to replace eth0 and sdwan0 with the correct values from your router VM:

    sudo iptables -A FORWARD -i eth0 -o sdwan0 -j ACCEPT
    sudo iptables -A FORWARD -i sdwan0 -o eth0 -m state --state RELATED,ESTABLISHED -j ACCEPT
    sudo iptables -t nat -A POSTROUTING -o sdwan0 -j MASQUERADE

Deploying the test VM

Similar to the instructions for site 1, we will create a test VM in the same VPC and subnet as the router VM and add it as a Twingate Resource:

Assign the Resource to the Service Account corresponding to site 1:

Routing and Firewalls

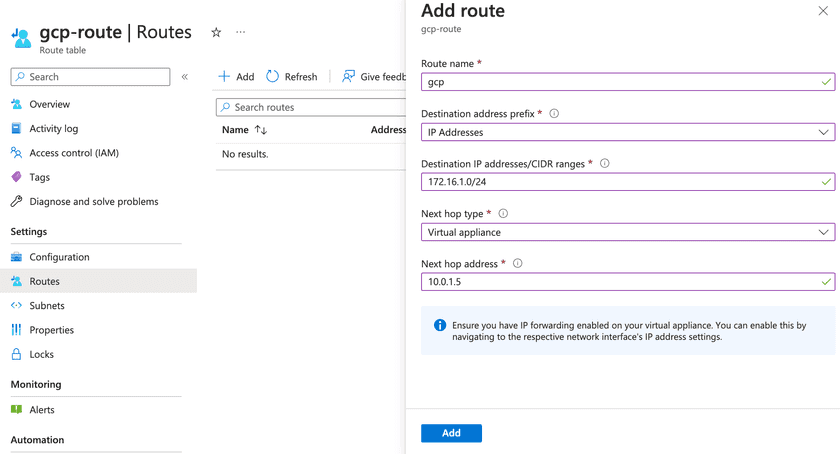

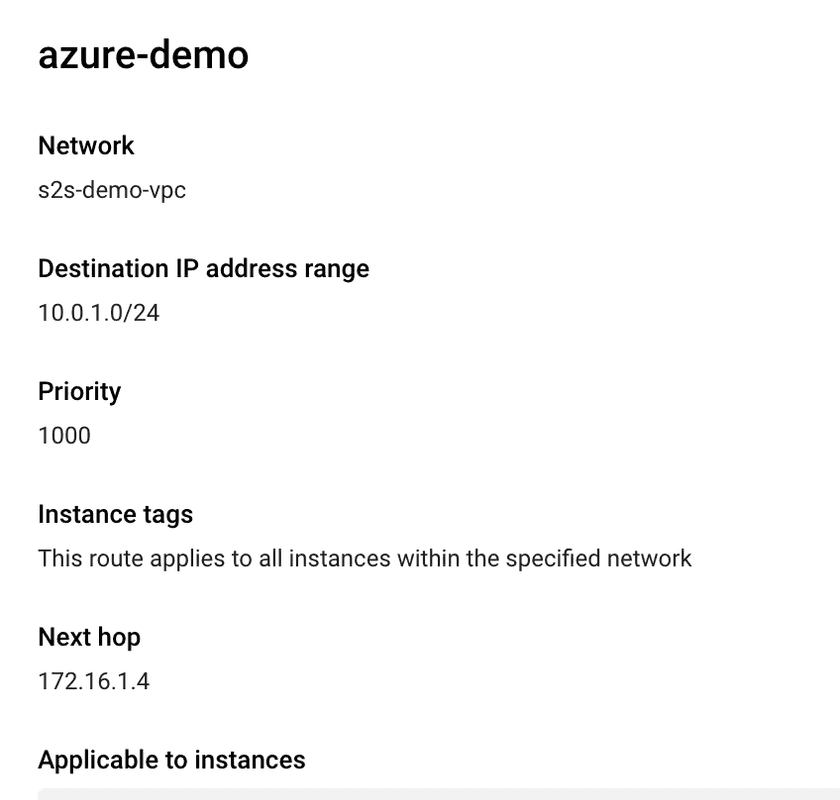

Depending on your requirements for site-to-site communications, routing and firewall settings may vary. In this example, we are routing all traffic from the site 1 subnet (10.0.1.0/24) to the site 2 subnet (172.16.1.0/24) and vice versa.

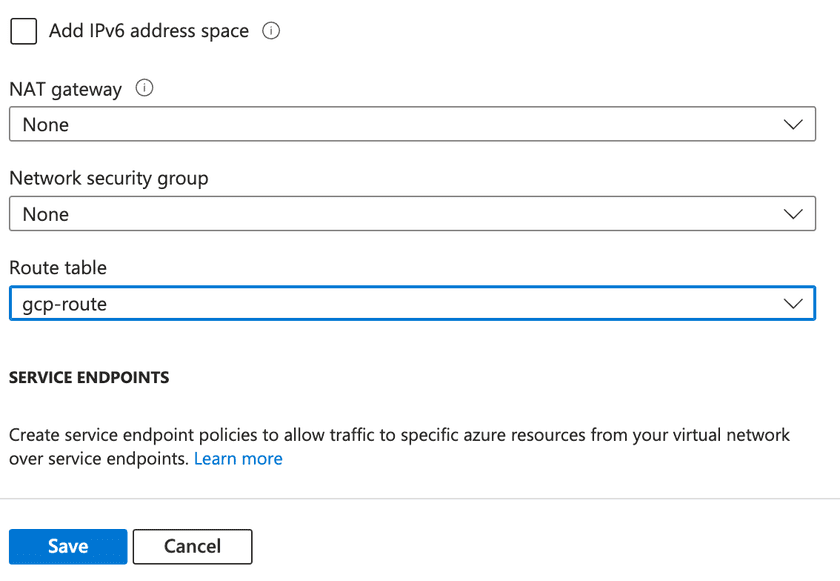

For subnet traffic to route correctly, you need to set up routing on site 1 and site 2 independently. On Azure, this can be done by creating a new route table with a route to the remote subnet:

Once created, associate this route table with your subnet:

Finally, add a route in GCP as well:
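Both sets of routes could also be created from the command line. The sketch below rests on several assumptions: the router VM in each site is the next hop (consistent with using the router VMs as gateways), all resource names are hypothetical, and `10.0.1.5` is a made-up private IP address for the Azure router VM. It also enables IP forwarding on the Azure router VM's network interface, which Azure requires before a VM can forward traffic that is not addressed to it. Commands are printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Hypothetical names and addresses; adjust them to your environment.
RG="${RG:-s2s-demo-rg}"
VNET="${VNET:-s2s-demo-vnet}"
SUBNET="${SUBNET:-s2s-demo-subnet}"
ROUTE_TABLE="${ROUTE_TABLE:-s2s-demo-routes}"
AZ_ROUTER_IP="${AZ_ROUTER_IP:-10.0.1.5}"
AZ_ROUTER_NIC="${AZ_ROUTER_NIC:-s2s-demo-router-nic}"
GCP_VPC="${GCP_VPC:-s2s-demo-vpc}"
GCP_ROUTER_VM="${GCP_ROUTER_VM:-s2s-demo-router-vm}"
GCP_ZONE="${GCP_ZONE:-us-central1-a}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_site_routes() {
  # Site 1 (Azure): send traffic for the site 2 subnet to the router VM.
  run az network route-table create --resource-group "$RG" --name "$ROUTE_TABLE"
  run az network route-table route create --resource-group "$RG" \
    --route-table-name "$ROUTE_TABLE" --name to-site2 \
    --address-prefix 172.16.1.0/24 \
    --next-hop-type VirtualAppliance --next-hop-ip-address "$AZ_ROUTER_IP"
  run az network vnet subnet update --resource-group "$RG" \
    --vnet-name "$VNET" --name "$SUBNET" --route-table "$ROUTE_TABLE"
  run az network nic update --resource-group "$RG" --name "$AZ_ROUTER_NIC" \
    --ip-forwarding true
  # Site 2 (GCP): send traffic for the site 1 subnet to the router VM.
  run gcloud compute routes create to-site1 --network="$GCP_VPC" \
    --destination-range=10.0.1.0/24 \
    --next-hop-instance="$GCP_ROUTER_VM" --next-hop-instance-zone="$GCP_ZONE"
}

create_site_routes
```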

Once routing is in place, we can test connectivity:

First, from GCP → Azure, run a ping from the test VM in site 2 to the test VM in site 1:

    @s2s-demo-test-vm:~$ ping 10.0.1.6
    PING 10.0.1.6 (10.0.1.6) 56(84) bytes of data.
    64 bytes from 10.0.1.6: icmp_seq=1 ttl=254 time=14.8 ms
    64 bytes from 10.0.1.6: icmp_seq=2 ttl=254 time=10 ms

Second, from Azure → GCP, run a ping from the test VM in site 1 to the test VM in site 2:

    @s2s-demo-test-vm:~$ ping 172.16.1.5
    PING 172.16.1.5 (172.16.1.5) 56(84) bytes of data.
    64 bytes from 172.16.1.5: icmp_seq=1 ttl=254 time=14.4 ms
    64 bytes from 172.16.1.5: icmp_seq=1 ttl=254 time=10.1 ms

You should now be able to route traffic between the two networks using the router VMs as gateways!
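For repeated checks, the ping test can be wrapped in a small helper that returns a non-zero status when the remote test VM does not answer. This is only a sketch: `check_reachable` and the `PING` variable are hypothetical names, and the `-c` and `-W` options assume the Linux `ping` from iputils.

```shell
#!/bin/sh
PING="${PING:-ping}"

# Report whether a host answers ICMP echo requests.
check_reachable() {
  # -c 3: send three echo requests; -W 2: wait up to two seconds for each reply.
  if $PING -c 3 -W 2 "$1" >/dev/null 2>&1; then
    printf '%s reachable\n' "$1"
  else
    printf '%s unreachable\n' "$1"
    return 1
  fi
}

# Possible usage:
#   check_reachable 10.0.1.6     # from the site 2 test VM
#   check_reachable 172.16.1.5   # from the site 1 test VM
```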
